
AI Development Services for Your Next Big Idea

End‑to‑end AI development services spanning discovery, data engineering, model development (ML, NLP, computer vision, LLMs), MLOps, and secure deployment, built to automate workflows, improve decision‑making, and deliver measurable ROI.

Stakeholder workshops, value mapping, and feasibility scoring to identify high‑ROI AI opportunities. We define KPIs/OKRs, build the business case (TCO/ROI), outline change‑management needs, and produce a delivery roadmap that de‑risks execution.

Ingest, clean, and transform data with robust ETL/ELT for batch and streaming (Kafka/Spark). Implement feature stores, data quality checks, lineage, and governance (lakehouse patterns) to make data AI‑ready and compliant.
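As an illustration only, here is a minimal sketch of rule‑based data quality checks gating a batch before it moves downstream. The record fields (`customer_id`, `amount`, `country`), the `Check` type, and the 1% failure threshold are hypothetical; production pipelines typically use a dedicated validation framework instead.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]

@dataclass
class Check:
    """A named rule that a single record must satisfy (hypothetical structure)."""
    name: str
    predicate: Callable[[Record], bool]

def run_checks(records: list[Record], checks: list[Check]) -> dict[str, int]:
    """Count how many records fail each check."""
    failures = {c.name: 0 for c in checks}
    for rec in records:
        for c in checks:
            if not c.predicate(rec):
                failures[c.name] += 1
    return failures

def passes_gate(report: dict[str, int], total: int, max_failure_rate: float = 0.01) -> bool:
    """Accept the batch only if every check stays under the failure-rate threshold."""
    return all(n / total <= max_failure_rate for n in report.values())

# Hypothetical rules for an illustrative transactions feed.
CHECKS = [
    Check("customer_id_present", lambda r: r.get("customer_id") is not None),
    Check("amount_non_negative",
          lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    Check("country_is_iso2",
          lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2),
]

batch = [
    {"customer_id": 1, "amount": 19.99, "country": "DE"},
    {"customer_id": None, "amount": 5.0, "country": "US"},  # missing ID
    {"customer_id": 3, "amount": -2.0, "country": "USA"},   # negative amount, bad country code
]

report = run_checks(batch, CHECKS)
print(report, "gate passed:", passes_gate(report, len(batch)))
```

Keeping each rule as a named predicate makes the failure report readable and lets new checks be added without touching the runner.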

Supervised, unsupervised, and time‑series models for classification, regression, clustering, anomaly detection, and optimization. Feature engineering, hyperparameter tuning, explainability (SHAP/LIME), and A/B testing to ensure reliable performance.
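To give a sense of what A/B testing involves, here is a minimal sketch of a two‑sided, two‑proportion z‑test comparing conversion rates of a control model (A) and a candidate model (B). The conversion counts are made‑up illustration values, and a real experiment also needs up‑front sample‑size planning.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; cannot test")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Illustrative numbers: 2.0% vs 2.6% conversion over 10,000 users each.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.3f}, p = {p:.4f}")
```

A small p‑value suggests the difference is unlikely under equal conversion rates; it says nothing about whether the uplift is large enough to matter commercially.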

Deploy GPT‑class models with retrieval‑augmented generation (RAG), vector databases (Pinecone/FAISS/Weaviate), prompt engineering, and fine‑tuning. Add guardrails, tool‑use/agents, and evaluation to reduce hallucinations and keep outputs safe and accurate.
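For readers new to RAG, here is a minimal sketch of the retrieval step: rank documents by similarity to the question and ground the prompt in the top matches. The bag‑of‑words `embed` function is a toy stand‑in assumed for illustration; a real deployment would use an embedding model and a vector database such as Pinecone, FAISS, or Weaviate. The prompt wording is likewise hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model in retrieved context and tell it not to guess."""
    ctx = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not in the context, say so.\n"
        f"Context:\n{ctx}\n\nQuestion: {query}"
    )

docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refunds require the order number.",
]
query = "How long do refunds take?"
top = retrieve(query, docs, k=2)
prompt = build_prompt(query, top)
print(prompt)
```

Instructing the model to answer only from retrieved context, and to say when the context is insufficient, is one of the simpler levers for reducing hallucinations.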

End‑to‑end NLP: NER, sentiment, topic modeling, summarization, semantic search, and document understanding (OCR). Multilingual pipelines accelerate knowledge discovery, compliance reviews, and customer insight extraction.

Image/video classification, detection, segmentation, tracking, and OCR for inspection, safety, and automation. Optimize for edge and cloud (ONNX/TensorRT) to cut inference latency and boost throughput in production environments.

Intelligent automation with LLM copilots, autonomous agents, and decision engines. Orchestrate APIs, RPA, and BPM workflows to eliminate repetitive tasks, cut handling time, and improve SLA adherence.

Personalization (next‑best‑action, cross‑sell/upsell) and time‑series forecasting (ARIMA/Prophet/DeepAR) for demand, inventory, and pricing. Increase conversions, reduce stockouts, and improve revenue predictability.

Production MLOps with CI/CD pipelines, a model registry, and a feature store, built on tools such as MLflow, Kubeflow, Vertex AI, or SageMaker. Monitoring for performance, drift, and bias; human‑in‑the‑loop review; governance and audit trails for regulated workloads.
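As one example of drift monitoring, here is a minimal sketch of the Population Stability Index (PSI), which compares a feature's live distribution against its training‑time reference. The equal‑width binning, the `eps` floor, and the commonly cited rule of thumb (below 0.1 stable, above 0.25 significant drift) are assumptions for illustration, not fixed standards.

```python
import math

def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to the reference `expected`.

    Bin edges are equal-width over the reference sample's range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant reference

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(1000)]  # illustrative training-time values, 0.00..9.99
shifted = [x + 3 for x in reference]        # simulated drift: the live feature moved up by 3

print(f"PSI (no drift):   {psi(reference, reference):.4f}")
print(f"PSI (with drift): {psi(reference, shifted):.4f}")
```

In practice a score like this would be computed per feature on a schedule and wired to alerts, with thresholds tuned to each model's tolerance.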

Secure, scalable deployments on AWS, Azure, and GCP using containers, serverless, and Kubernetes. Support for private networking/VPC, zero‑trust patterns, and air‑gapped or on‑prem installations where required.

Privacy‑by‑design for PII/PHI with encryption in transit/at rest, secrets management, RBAC/SSO, and least‑privilege access. Align with SOC 2, ISO 27001, HIPAA, and GDPR—including DPAs, retention, and data residency.

Enablement and change management with playbooks, runbooks, and documentation. Structured handover, knowledge‑transfer (KT) sessions, and SLAs/SLOs for reliable operations, plus retainer‑based L2/L3 support and incident response.

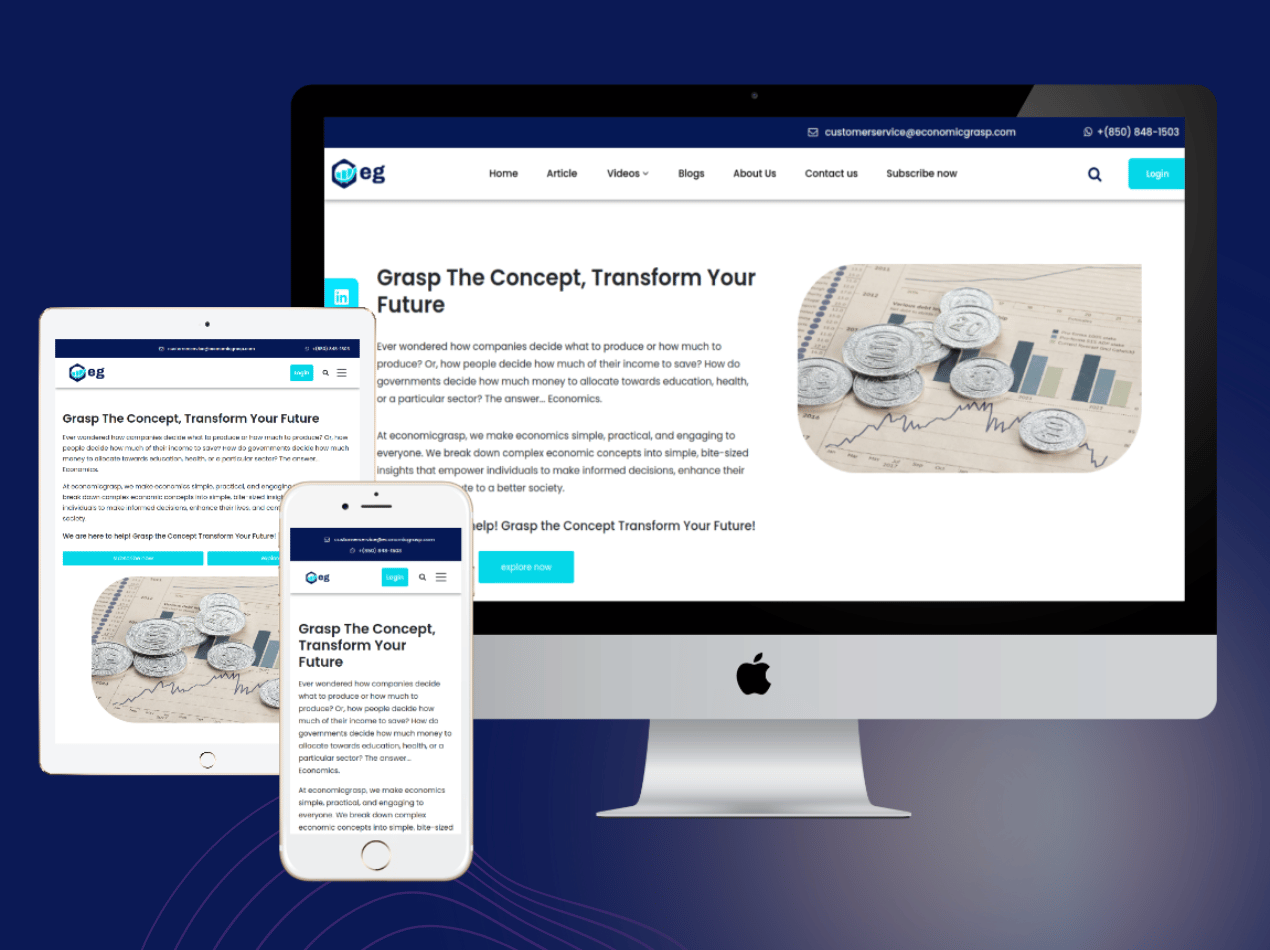

Our Best Projects

Projects we have worked on

Economic Grasp Web App

E-Learning

Technologies We Use

Everything You Need to Know About Our Software Development and Digital Services

Your Questions, Our Expert Answers.

Our Work Speaks ❤️ But Our Clients Say It Best

Real Feedback from Businesses Who Trust TurtleSoft for Their Software & IT Needs

© Doob 2026